Yesterday I discovered that some of the nodes have a failover function: if the dedicated BMC NIC fails, the BMC switches over to the normal NIC. Unfortunately, there is no failback (for if/when the BMC NIC comes back online); once failover has been triggered, it stays that way until the BMC is power-cycled. When I redid the network layout, a lot of BMCs failed over and started requesting their management IPs on the PROD/DEV network, where, of course, they got no IP at all.

Today I powered down all those nodes and pulled the power cords, so that the BMCs lost power as well. After 5 to 10 seconds without power, I turned them on again. So far, so good: loads of BMCs came up correctly on the management network. However, a large number of them still tried to get an IP on the PROD network. I couldn't figure out why; the switches are all properly configured. And then I found the pattern:

    root@portal-ecs1:/etc/dsh/group# for host in $(cat prodcluster); do if ! ping -c1 -t2 "$host-mgmt"|grep -qi "bytes from"; then echo "$host-mgmt"; fi; done
    cn010-mgmt
    fepdimutrk3-mgmt
    fepemcal0-mgmt
    fepemcal1-mgmt
    fepemcal2-mgmt
    fepemcal3-mgmt
    fepemcal4-mgmt
    fephltout2-mgmt
    feppmd1-mgmt
    fepsdd0-mgmt
    fepsdd1-mgmt
    fepsdd2-mgmt
    fepsdd3-mgmt
    fepsdd4-mgmt
    fepsdd5-mgmt
    feptofa00-mgmt
    feptofa02-mgmt
    feptofa04-mgmt
    feptofa06-mgmt
    feptofa08-mgmt
    feptofa10-mgmt
    feptofa12-mgmt
    feptofa14-mgmt
    feptofa16-mgmt
    feptofc00-mgmt
    feptofc02-mgmt
    feptofc04-mgmt
    feptofc06-mgmt
    feptofc10-mgmt
    feptofc12-mgmt
    feptofc14-mgmt
    feptofc16-mgmt
    feptpcco17-mgmt
    feptrd14-mgmt
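The one-liner above does the job, but two details are worth knowing if you reuse it. With the Linux (iputils) ping, `-t` sets the TTL rather than a timeout; the time to wait for a reply is `-W`. And ping already exits non-zero when it gets no reply, so the `grep` isn't needed. Here is a minimal sketch of the same check as a small script, assuming iputils ping and the `<host>-mgmt` naming from the output above; the file name `check-bmc.sh` and the function names are hypothetical:

```shell
#!/bin/sh
# Sketch: list BMC hostnames ("<host>-mgmt") from a dsh group file that do not
# answer a single ping. The "-mgmt" suffix matches the naming used above.

# Print one hostname per line, dropping "#" comments, whitespace and blank lines.
read_group() {
    sed -e 's/#.*//' -e 's/[[:space:]]//g' -e '/^$/d' "$1"
}

# Print every "<host><suffix>" that gives no reply within 2 seconds.
list_unreachable() {
    local group="$1" suffix="${2:--mgmt}"
    read_group "$group" | while IFS= read -r host; do
        # -c1: send one echo request; -W2: wait at most 2 s for a reply (iputils).
        # ping exits non-zero when no reply arrives, so no grep is needed.
        # </dev/null keeps anything inside the loop from eating the host list.
        if ! ping -c1 -W2 "$host$suffix" >/dev/null 2>&1 </dev/null; then
            printf '%s\n' "$host$suffix"
        fi
    done
}

# Example (hypothetical name): ./check-bmc.sh /etc/dsh/group/prodcluster
if [ "$#" -gt 0 ]; then
    list_unreachable "$@"
fi
```

An optional second argument changes the suffix, e.g. `./check-bmc.sh prodcluster -ipmi` if your BMCs used a different naming scheme.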

All of these (with _maybe_ a few exceptions) are running the same motherboard with the same IPMI/BMC add-on card, both from Tyan. The add-on card is needed to activate the BMC features. The sad thing, though, is that it seems to send its DHCP requests only out of the main NIC, that is LAN1/eth1, regardless of whether the other two NICs have link. The ironic part is that the source MAC of the DHCPDISCOVERs is actually eth0's (eth0 being the dedicated management NIC). So, with no help on Tyan's website, and none of the available ipmitool tricks for changing which NIC the BMC uses working, I decided to go the somewhat easy way, even though it's not ideal:
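If you want to check the same thing on your own hardware, compare the node's eth0 MAC with the source MAC of the DHCP requests, captured on the DHCP server or another machine on the same network segment. A minimal sketch, assuming Linux with sysfs on the node and tcpdump on the capturing machine; the function names are hypothetical:

```shell
#!/bin/sh
# Sketch: find out which MAC a node's BMC puts in its DHCP requests.
# Interface names are assumptions; adjust them to your own layout.

# On the node: print the MAC address of a local interface (Linux sysfs).
mac_of() {
    cat "/sys/class/net/$1/address"
}

# On the DHCP server (needs root): show DHCP traffic only, with the Ethernet
# header printed (-e) and no name resolution (-n).
watch_dhcp() {
    tcpdump -n -e -i "$1" 'udp port 67 or udp port 68'
}
```

Run `mac_of eth0` on the node and `watch_dhcp eth0` (or whichever interface faces the PROD network) on the DHCP server, then power-cycle the node. With `-e`, tcpdump prints the Ethernet source MAC near the start of each line, so a request that shows up on the PROD side while carrying eth0's MAC confirms the behaviour described above. On the node itself, `ipmitool lan print 1` should also list the MAC address the BMC uses, though the LAN channel number varies between boards.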

Make eth1 the dedicated management NIC, and use eth2 for the normal network. I don't like using a gigabit NIC for management, but it's better than spending ages figuring out how to get the card to request IPs from the management NIC. There have been some suggestions to flash the BIOS, the NIC and the IPMI/BMC add-on card, but that carries a real risk of bricking stuff, so I won't go down that route. Not for now, at least.

So, tomorrow I'll move the normal network from eth1 to eth2 on ~30 nodes. Nice to spend time on something as useful as this! :-D
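The per-node config change itself can be scripted. Here is a minimal sketch, assuming the nodes use Debian's ifupdown (`/etc/network/interfaces`) and GNU sed, since the `\b` word boundary is a GNU extension. The function name and the `--apply` flag are hypothetical:

```shell
#!/bin/sh
# Sketch: move a node's normal network config from eth1 to eth2.
# Assumptions: Debian ifupdown (/etc/network/interfaces) and GNU sed (\b).

# Replace whole-word "eth1" with "eth2" in FILE, keeping FILE.bak as a backup.
# \b leaves e.g. "eth10" untouched; a VLAN like "eth1.100" becomes "eth2.100".
swap_nic_in_file() {
    sed -i.bak 's/\beth1\b/eth2/g' "$1"
}

# Only touch the real config when explicitly asked to.
if [ "${1:-}" = "--apply" ]; then
    swap_nic_in_file /etc/network/interfaces
fi
```

Try it on a copy of the file, or on a single node, first. Once it looks right, copy it out and run it with `--apply` on each node (dsh with `-g prodcluster` is one way). Then change the cabling or switch ports to match.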

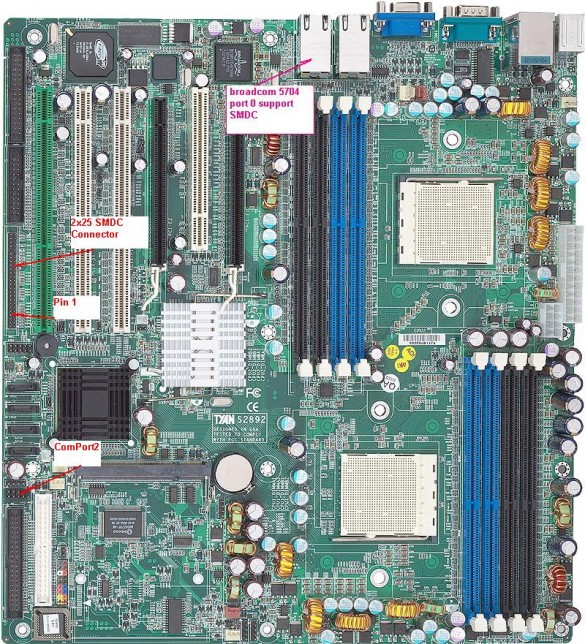

Update: According to the picture above, it's actually true: only eth1/LAN1 supports IPMI/BMC. That's kinda LOL, considering you end up wasting a gigabit NIC when there's a 100 Mbps NIC available. GG, Tyan!